In this blog post, you will learn several new skills and concepts related to image classification in Keras with data fed through TensorFlow Datasets.

- TensorFlow Datasets provide a convenient way for us to organize operations on our training, validation, and test data sets.

- Data augmentation allows us to create expanded versions of our data sets that allow models to learn patterns more robustly.

- Transfer learning allows us to use pre-trained models for new tasks.

Working on the coding portion of the Blog Post in Google Colab is strongly recommended. When training your model, enabling a GPU runtime (under Runtime -> Change Runtime Type) is likely to lead to significant speed benefits.

Overview

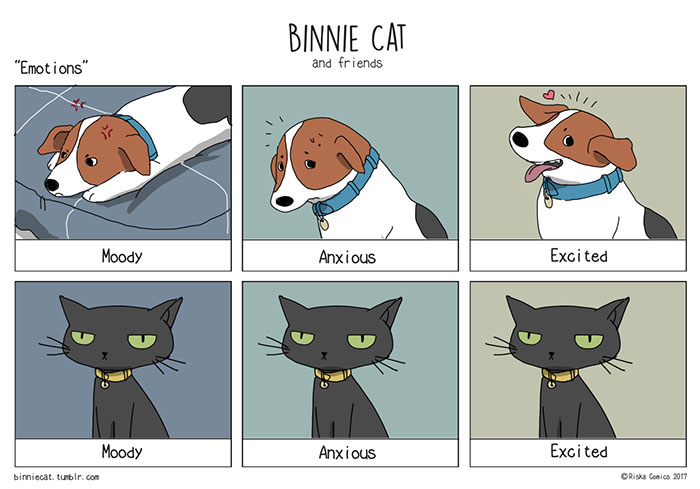

Can you teach a machine learning algorithm to distinguish between pictures of dogs and pictures of cats?

According to this helpful diagram below, one way to do this is to attend to the visible emotional range of the pet:

Unfortunately, using this method requires that we have access to multiple images of the same individual. We will consider a setting in which we have only one image per pet. Can we reliably distinguish between cats and dogs in this case?

Acknowledgment

Major parts of this Blog Post assignment, including several code chunks, are based on the TensorFlow Transfer Learning Tutorial. You may find that consulting this tutorial is helpful while completing this assignment, although this shouldn’t be necessary.

Instructions

1. Load Packages and Obtain Data

Start by making a code block in which you’ll hold your import statements. You can update this block as you go. For now, include

import os
import keras
from keras import utils
import tensorflow_datasets as tfds

Now, let’s access the data. We’ll use a sample data set from Kaggle that contains labeled images of cats and dogs.

Paste and run the following code block.

train_ds, validation_ds, test_ds = tfds.load(
    "cats_vs_dogs",
    # 40% for training, 10% for validation, and 10% for test (the rest unused)
    split=["train[:40%]", "train[40%:50%]", "train[50%:60%]"],
    as_supervised=True,  # Include labels
)

print(f"Number of training samples: {train_ds.cardinality()}")
print(f"Number of validation samples: {validation_ds.cardinality()}")
print(f"Number of test samples: {test_ds.cardinality()}")

By running this code, we have created Datasets for training, validation, and testing. You can think of a Dataset as a pipeline that feeds data to a machine learning model. We use data sets in cases in which it’s not necessarily practical to load all the data into memory.

Paste the following code into the next block. The dataset contains images of different sizes, so we resize them to a fixed size of 150x150.

resize_fn = keras.layers.Resizing(150, 150)

train_ds = train_ds.map(lambda x, y: (resize_fn(x), y))
validation_ds = validation_ds.map(lambda x, y: (resize_fn(x), y))
test_ds = test_ds.map(lambda x, y: (resize_fn(x), y))

The next block is technical code related to rapidly reading data. If you’re interested in learning more about this kind of thing, you can take a look here. The batch_size determines how many data points are gathered from the directory at once.

from tensorflow import data as tf_data

batch_size = 64

train_ds = train_ds.batch(batch_size).prefetch(tf_data.AUTOTUNE).cache()
validation_ds = validation_ds.batch(batch_size).prefetch(tf_data.AUTOTUNE).cache()
test_ds = test_ds.batch(batch_size).prefetch(tf_data.AUTOTUNE).cache()

Working with Datasets

You can get a piece of a data set using the take method; e.g. train_ds.take(1) will retrieve one batch (64 images with labels, matching the batch_size set above) from the training data.
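To make the idea of batches and take more concrete, here is a minimal pure-Python sketch of the same pattern. It does not use TensorFlow at all: the helper names batch and take, and the list of 150 fake samples, are hypothetical stand-ins chosen only for illustration.

```python
from itertools import islice


def batch(items, batch_size):
    """Yield consecutive lists of at most batch_size items."""
    iterator = iter(items)
    while True:
        chunk = list(islice(iterator, batch_size))
        if not chunk:
            return
        yield chunk


def take(batches, n):
    """Return the first n batches as a list."""
    return list(islice(batches, n))


# Hypothetical stand-in for 150 (image, label) pairs.
samples = [(f"image_{i}", i % 2) for i in range(150)]

# One batch of 64 samples, analogous to taking a single batch from a Dataset.
first = take(batch(samples, 64), 1)

# The final batch is smaller when the data does not divide evenly.
all_batch_sizes = [len(b) for b in batch(samples, 64)]
```

Note that the last batch holds only the leftover samples, so not every batch is guaranteed to contain exactly 64 items.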

Let’s briefly explore our data set. Write a function to create a two-row visualization. In the first row, show three random pictures of cats. In the second row, show three random pictures of dogs. You can see some related code in the linked tutorial above, although you’ll need to make some modifications in order to separate cats and dogs by rows. A docstring is not required.

Check Label Frequencies

The following line of code will create an iterator called labels_iterator.

labels_iterator = train_ds.unbatch().map(lambda image, label: label).as_numpy_iterator()

Compute the number of images in the training data with label 0 (corresponding to "cat") and label 1 (corresponding to "dog").

The baseline machine learning model is the model that always guesses the most frequent label. Briefly discuss how accurate the baseline model would be in our case.

We’ll treat this as the benchmark for improvement. Our models should do much better than baseline in order to be considered good data science achievements!
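As a rough illustration of the baseline idea, the sketch below computes the accuracy of always guessing the most frequent label. The function name baseline_accuracy and the label counts are hypothetical; the real counts must come from your own training data.

```python
from collections import Counter


def baseline_accuracy(labels):
    """Return (most frequent label, accuracy of always guessing it)."""
    counts = Counter(labels)
    total = sum(counts.values())
    label, count = counts.most_common(1)[0]
    return label, count / total


# Hypothetical labels: 48 cats (0) and 52 dogs (1). Not the real data.
example_labels = [0] * 48 + [1] * 52
majority_label, accuracy = baseline_accuracy(example_labels)
```

Because the function only iterates over its input once, it should also accept an iterator of labels rather than a list, although that use is an assumption and not something tested here.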

2. First Model

Create a keras.Sequential model using some of the layers we’ve discussed in class. In each model, include at least two Conv2D layers, at least two MaxPooling2D layers, at least one Flatten layer, at least one Dense layer, and at least one Dropout layer. Train your model and plot the history of the accuracy on both the training and validation sets. Give your model the name model1.

To train a model on a Dataset, use syntax like this:

history = model1.fit(train_ds,
                     epochs=20,
                     validation_data=validation_ds)

Here and in later parts of this assignment, training for 20 epochs with the Dataset settings described above should be sufficient.

You don’t have to show multiple models, but please do a few experiments to try to get the best validation accuracy you can. Briefly describe a few of the things you tried. Please make sure that you are able to consistently achieve at least 55% validation accuracy in this part (i.e. just a bit better than baseline).

- In bold font, describe the validation accuracy of your model during training. You don’t have to be precise. For example, “the accuracy of my model stabilized between 65% and 70% during training.”

- Then, compare that to the baseline. How much better did you do?

- Overfitting can be observed when the training accuracy is much higher than the validation accuracy. Do you observe overfitting in model1?
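One way to put numbers on these questions is to summarize the accuracy curves directly. The sketch below assumes the model was compiled with metrics=["accuracy"], so that history.history contains the keys "accuracy" and "val_accuracy"; the function name summarize_history and the example values are hypothetical.

```python
def summarize_history(history_dict):
    """Summarize best validation accuracy and the final train/validation gap."""
    train = history_dict["accuracy"]
    val = history_dict["val_accuracy"]
    best_index = max(range(len(val)), key=lambda e: val[e])
    return {
        "best_epoch": best_index + 1,  # epochs are counted from 1
        "best_val_accuracy": val[best_index],
        "final_gap": train[-1] - val[-1],  # a large positive gap hints at overfitting
    }


# Hypothetical curves for a four-epoch run. Not real results.
example = {
    "accuracy": [0.55, 0.68, 0.81, 0.93],
    "val_accuracy": [0.54, 0.62, 0.66, 0.65],
}
summary = summarize_history(example)
```

With a trained model, the same function could be called as summarize_history(history.history).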

3. Model with Data Augmentation

Now we’re going to add some data augmentation layers to your model. Data augmentation refers to the practice of including modified copies of the same image in the training set. For example, a picture of a cat is still a picture of a cat even if we flip it upside down or rotate it 90 degrees. We can include such transformed versions of the image in our training process in order to help our model learn so-called invariant features of our input images.
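To see why a flipped or rotated image still carries the same content, here is a small NumPy sketch on a tiny made-up array. It is only an illustration of the idea: the Keras layers choose their transformations at random, and RandomRotation is not limited to multiples of 90 degrees.

```python
import numpy as np

# Tiny hypothetical 3x3 RGB "image" with distinct values at every position.
image = np.arange(27).reshape(3, 3, 3)

# Flip left-to-right and top-to-bottom (height is axis 0, width is axis 1).
flipped_lr = np.flip(image, axis=1)
flipped_ud = np.flip(image, axis=0)

# Rotate by 90 degrees in the height/width plane, leaving the channels alone.
rotated = np.rot90(image, k=1, axes=(0, 1))

# The same pixel values are present after each transformation, only rearranged.
same_pixels = sorted(rotated.ravel()) == sorted(image.ravel())
```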

- First, create a keras.layers.RandomFlip() layer. Make a plot of the original image and a few copies to which RandomFlip() has been applied. Make sure to check the documentation for this function!

- Next, create a keras.layers.RandomRotation() layer. Check the docs to learn more about the arguments accepted by this layer. Then, make a plot of both the original image and a few copies to which RandomRotation() has been applied.

Now, create a new keras.models.Sequential model called model2 in which the first two layers are augmentation layers. Use a RandomFlip() layer and a RandomRotation() layer. Train your model, and visualize the training history.

Please make sure that you are able to consistently achieve at least 60% validation accuracy in this part. Scores of near 70% are possible.

Note: You might find that your model in this section performs a bit worse than the one before, even on the validation set. If so, just comment on it! That doesn’t mean there’s anything wrong with your approach. We’ll see improvements soon.

- In bold font, describe the validation accuracy of your model during training.

- Comment on this validation accuracy in comparison to the accuracy you were able to obtain with model1.

- Comment again on overfitting. Do you observe overfitting in model2?

4. Data Preprocessing (MODIFIED 2/28)

Sometimes, it can be helpful to make simple transformations to the input data. For example, in this case, the original data has pixels with RGB values between 0 and 255, but many models will train faster with RGB values normalized between 0 and 1, or possibly between -1 and 1. These are mathematically identical situations, since we can always just scale the weights. But if we handle the scaling prior to the training process, we can spend more of our training energy handling actual signal in the data and less energy having the weights adjust to the data scale.
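The arithmetic behind this kind of rescaling is simple enough to check by hand. The sketch below applies the formula (input * scale) + offset to a few pixel values; the function name rescale is hypothetical and the code is plain Python, not a Keras layer.

```python
def rescale(pixel, scale, offset):
    """Apply the linear map (pixel * scale) + offset."""
    return pixel * scale + offset


pixels = (0, 127.5, 255)

# Map the range 0..255 onto roughly -1..1.
to_minus_one_one = [rescale(p, scale=1 / 127.5, offset=-1.0) for p in pixels]

# Map the range 0..255 onto roughly 0..1.
to_zero_one = [rescale(p, scale=1 / 255, offset=0.0) for p in pixels]
```

In the first case the darkest pixel lands at about -1, the midpoint at about 0, and the brightest pixel at about 1, up to floating-point rounding.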

The following code will create a preprocessing layer called preprocessor which you can slot into your model pipeline.

i = keras.Input(shape=(150, 150, 3))
# The pixel values have the range of (0, 255), but many models will work better if rescaled to (-1, 1).
# outputs: `(inputs * scale) + offset`
scale_layer = keras.layers.Rescaling(scale=1 / 127.5, offset=-1)
x = scale_layer(i)
preprocessor = keras.Model(inputs=i, outputs=x)

I suggest incorporating the preprocessor layer as the very first layer, before the data augmentation layers. Call the resulting model model3.

Now, train this model and visualize the training history. This time, please make sure that you are able to achieve at least 80% validation accuracy.

- In bold font, describe the validation accuracy of your model during training.

- Comment on this validation accuracy in comparison to the accuracy you were able to obtain with model1.

- Comment again on overfitting. Do you observe overfitting in model3?

5. Transfer Learning

So far, we’ve been training models for distinguishing between cats and dogs from scratch. In some cases, however, someone might already have trained a model that does a related task, and might have learned some relevant patterns. For example, folks train machine learning models for a variety of image recognition tasks. Maybe we could use a pre-existing model for our task?

To do this, we need to first access a pre-existing “base model”, incorporate it into a full model for our current task, and then train that model.

Paste the following code in order to download MobileNetV3Large and configure it as a layer that can be included in your model.

IMG_SHAPE = (150, 150, 3)
base_model = keras.applications.MobileNetV3Large(input_shape=IMG_SHAPE,
                                                 include_top=False,
                                                 weights='imagenet')
base_model.trainable = False

i = keras.Input(shape=IMG_SHAPE)
x = base_model(i, training=False)
base_model_layer = keras.Model(inputs=i, outputs=x)

Now, create a model called model4 that uses MobileNetV3Large. For this, you should definitely use the following layers:

1. REMOVED 2/28 (preprocessing layers are included in MobileNetV3Large): the preprocessor layer from Part §4.

2. The data augmentation layers from Part §3.

3. The base_model_layer constructed above.

4. A Dense(2) layer at the very end to actually perform the classification.

Between 3. and 4., you might want to place a small number of additional layers, like GlobalMaxPooling2D or possibly Dropout. You don’t need a lot though! Once you’ve constructed the model, check the model.summary() to see why – there is a LOT of complexity hidden in the base_model_layer. Show the summary and comment. How many parameters do we have to train in the model?
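Since the base model is frozen, the trainable parameters come only from the layers you add after it. As a back-of-the-envelope check, the sketch below counts the weights and biases of a Dense layer. The value 960 for the number of features entering the Dense(2) layer is an assumption about what a global pooling layer would output here; confirm the real number in your own model.summary().

```python
def dense_param_count(num_inputs, num_units):
    """Count the parameters of a fully connected layer: weights plus biases."""
    return num_inputs * num_units + num_units


# Assumed feature count after global pooling. Verify against model.summary().
assumed_features = 960

# Two output units, one per class (cat and dog).
head_params = dense_param_count(assumed_features, 2)
```

Pooling and Dropout layers have no trainable parameters, so under this assumption the Dense(2) layer would account for all of the parameters being trained.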

Finally, train your model for 20 epochs, and visualize the training history.

This time, please make sure that you are able to achieve at least 93% validation accuracy. That’s not a typo!

- In bold font, describe the validation accuracy of your model during training.

- Comment on this validation accuracy in comparison to the accuracy you were able to obtain with model1.

- Comment again on overfitting. Do you observe overfitting in model4?

6. Score on Test Data

Feel free to mess around with various model structures and settings in order to get the best validation accuracy you can. Finally, evaluate the accuracy of your most performant model on the unseen test_ds. How’d you do?
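In practice, a call such as model.evaluate(test_ds) reports the test accuracy for you. To show what that number means, the sketch below computes accuracy by hand from two-score outputs like those of a Dense(2) layer, assuming the larger score marks the predicted class. The function names and all values are hypothetical.

```python
def predict_labels(scores):
    """Pick the index of the largest score in each row (0 = cat, 1 = dog)."""
    return [max(range(len(row)), key=lambda k: row[k]) for row in scores]


def accuracy(predicted, actual):
    """Return the fraction of predictions that match the true labels."""
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


# Hypothetical model outputs for four images, with their true labels.
example_scores = [[2.1, -0.3], [0.2, 1.5], [-1.0, 0.4], [0.9, 0.1]]
example_labels = [0, 1, 0, 0]

predictions = predict_labels(example_scores)
test_accuracy = accuracy(predictions, example_labels)
```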

7. Write Your Blog Post

Turn your work into a tutorial Blog Post on the topic of image classification and transfer learning. Make sure to include all the code, summaries, and plots that you created. You might find it useful to refer to the Keras tutorial linked above, but your entire post should be in your own original words. Feel free to link your reader to any resources that you found helpful.

Specifications

Please remember that you must meet all specifications in order to receive credit on the first submission!

Format

- There is no autograder for this homework. Please submit the PDF printout of your blog post to the “pdf” window and any code you wrote to the “files” window – including index.ipynb and index.py.

Coding Problem

- The visualization in Part 1 is implemented using a function and shows labeled images of cats in one row and labeled images of dogs in another row.

- There are two visualizations in Part 3 showing the results of applying RandomFlip and RandomRotation to an example image.

- Models 1, 2, 3, and 4 are logically constructed and obtain the required validation accuracy in each case:

- Model 1 should obtain at least 55% validation accuracy.

- Model 2 should obtain at least 60% validation accuracy.

- Model 3 should obtain at least 80% validation accuracy.

- Model 4 should obtain at least 93% validation accuracy.

- The training history is visualized for each of the four models, including the training and validation performance.

- The most performant model is evaluated on the test data set.

Style and Documentation

- Code throughout is written using minimal repetition and clean style.

- Docstrings are not required in this Blog Post, but please make sure to include useful comments and detailed explanations for each of your code blocks.

Writing

- The blog post is written in tutorial format, in engaging and clear English. Grammar and spelling errors are acceptable within reason.